Estimating the parameters of an HMM (called “training the model”) will come a little later. I think it’s better to understand the recognition algorithm first, because it is simpler.

Reading

Jurafsky & Martin – Section 9.5 – The lexicon and language model

Simply mentions the lexicon and language model and refers the reader to other chapters.

Jurafsky & Martin – Section 9.6 – Search and Decoding

Important material on efficiently computing the combined likelihood of the acoustic model multiplied by the probability of the language model.

Conditional probability & Bayes' rule

We can combine probabilistic models of our prior beliefs, and of the signal being classified.

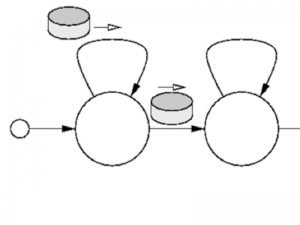

Computing P(O|W) with an HMM

This term is called the "likelihood" and is the conditional probability that a particular HMM (W) generated the given observation sequence (O).

Computing P(W) with a language model

This is the "prior" probability of W. It doesn't involve the observation sequence O, so can be computed without looking at the speech to be recognised.