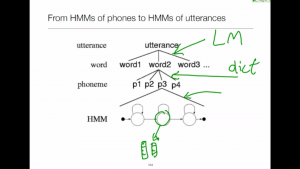

Another great thing about token passing is that it makes the extension to connected speech almost trivial.

Reading

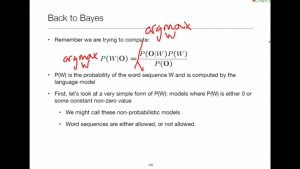

Jurafsky & Martin – Section 9.6 – Search and Decoding

Important material on efficiently combining the two scores used in decoding: the likelihood from the acoustic model multiplied by the probability from the language model.
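As a minimal sketch of that combination (not taken from the reading): decoders usually work with log probabilities, so the product becomes a sum and long sequences do not underflow. The function name, the two hypotheses and all the numbers below are made up for illustration.

```python
import math

def combined_log_score(acoustic_log_likelihood, lm_probability):
    """Return log(p(O|W) * P(W)), computed as a sum of logs."""
    return acoustic_log_likelihood + math.log(lm_probability)

# Hypothetical hypotheses: (acoustic log likelihood, language model probability)
hypotheses = {
    "recognise speech": (-120.0, 0.01),
    "wreck a nice beach": (-118.5, 0.0001),
}

scores = {words: combined_log_score(a, p) for words, (a, p) in hypotheses.items()}

# The decoder keeps the word sequence with the highest combined score.
best = max(scores, key=scores.get)
print(best)
```

With these invented numbers, the second hypothesis has the better acoustic score, but the language model makes the first one win overall.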

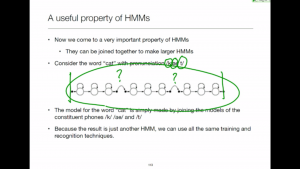

Sub-word units

We won't generally have examples of every word in the training data, so we will need to use sub-word units.
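A minimal sketch of the idea, assuming a pronunciation dictionary that maps each word to a sequence of phones. The words and phone symbols here are invented for illustration. A word that never occurs in the training data can still be modelled, provided all of its phones occur in words that do.

```python
# Hypothetical pronunciation dictionary: word -> phone sequence
lexicon = {
    "cat": ["k", "ae", "t"],
    "tack": ["t", "ae", "k"],
    "act": ["ae", "k", "t"],
}

def phones_seen(words, lexicon):
    """Collect the set of phones that occur in the given words."""
    seen = set()
    for word in words:
        seen.update(lexicon[word])
    return seen

training_words = ["cat", "tack"]  # words with recorded examples
new_word = "act"                  # no examples of this word

trained_phones = phones_seen(training_words, lexicon)

# The new word is covered if every one of its phones has a trained model.
covered = all(phone in trained_phones for phone in lexicon[new_word])
print(covered)
```

A word model for "act" would then be built by joining the phone models for its pronunciation, rather than by training a whole-word model.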