This term is called the "likelihood" and is the conditional probability that a particular HMM (W) generated the given observation sequence (O).

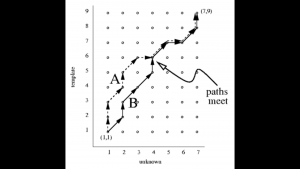

Relating DTW to the HMM

The template in DTW is now replaced by a model, but otherwise the methods are conceptually very similar.

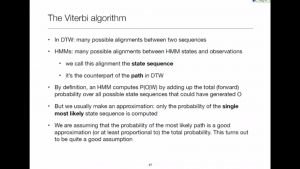

The Viterbi algorithm is dynamic programming

When we apply dynamic programming to an HMM, we call it the Viterbi algorithm.

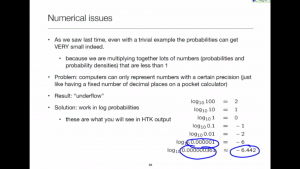

Numerical issues

Probabilities can get very small, so we must take care when storing them, and also when computing with them.

The Viterbi criterion

At the core of the Viterbi algorithm is this simple dynamic programming operation: discard all paths that cannot win.

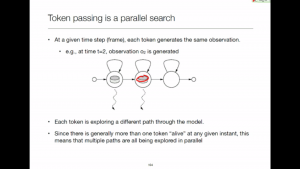

Token passing

This is a really nice way to understand, and to implement, dynamic programming in HMMs.

Pruning

Even with the approximation to P(O|W) made by the Viterbi algorithm, recognition might be too slow. So, we must speed things up further.