
We will now use a neural network to replace the regression tree used in HMM synthesis, while keeping an HMM-like mechanism to take care of the sequencing part of the problem.

Download the slides for the module 8 videos

Total video to watch in this section: 40 minutes

This video just has a plain transcript, not time-aligned to the video. THIS IS AN UNCORRECTED AUTOMATIC TRANSCRIPT. IT MAY BE CORRECTED LATER IF TIME PERMITS.
This module is an introduction to how we can do speech synthesis using neural networks, and I'll stress that word: introduction. This is a fast-moving field, so I'm not even going to attempt to describe the state of the art. What I'm going to do is give you an idea of what a neural network is and how it works, and then look in a little more detail at how we actually do text-to-speech with a neural network. That's really a matter of getting the inputs and the outputs into the right form, so that we can use the neural network to perform regression from linguistic features to speech parameters. In order to do that, we'll need to think about things like duration. I'll finish with a very impressionistic and hand-wavy description of how we might train a neural network using an algorithm called back-propagation, but I won't go into any mathematical details; I'm leaving those to the readings.
Let's do the usual thing of checking what we need to know before we can proceed. You obviously need to know quite a bit about speech synthesis before you get to this point, and in particular you need to know how text processing works in the front end and what linguistic features are available. You'll need to have completed the module on HMM synthesis, where we talked about flattening the rich linguistic specification into just a phonetic sequence. Each symbol in that sequence is a context-dependent phone, and all the context that we might need has to be attached to the phone: that includes left and right phonetic context, suprasegmental things such as prosody, and basic positional features, such as position in phrase. Implicit in the HMM method, but becoming explicit in the neural network method, is that these features can be further processed and treated as binary: either true or false. That will become even clearer as we work through the example of how to prepare the inputs to a neural network, because the inputs will have to be numerical. In HMM speech synthesis, the questions in our regression tree queried the linguistic features, and although it was done implicitly, that really treats those features as binary: either true or false. Of course, we also need to know something about the typical speech parameters used by a vocoder, because for the neural networks in this module those will be the output: we will still be driving a vocoder. This idea of representing a categorical linguistic feature as a binary vector is very important. The way to do that is something called one-hot coding, which we've already mentioned and which will become clear again later in this module. It has various names: sometimes people say one-of-K, or one-of-N, or one of some other letter. I quite like the phrase "one-hot" because it tells me that we've got a binary vector which is entirely full of zeros except for a single position where there's
a one, which means "on", or true, or "hot". That tells me which category, out of a set of possible categories, the current sound belongs to. For example, we could represent the current phone with a one-out-of-45 encoding, say. So what is a neural network? To make the connection back to hidden Markov model-based speech synthesis, let's say that a neural network is a regression model, like a regression tree. It's very general purpose: we can fit almost any problem into this framework. It's just a matter of getting the input and output into the correct format. In the case of a regression tree, we need to represent the input as something that we can query with yes/no questions, and that's exactly like saying we need to turn the input into a set of binary features that are either true or false, one or zero. A neural network is very similar. We need to get the input into the right form: it's going to have to be numerical, a vector of numbers. They don't have to be binary, just a vector of numbers, and the output has to be another vector of numbers. Throughout this module, I'm going to take a very simple view of neural networks: the feed-forward architecture. Once you start reading the literature, you'll find that there are many, many other possible architectures, and that's a place where you can put some knowledge of the problem into the model, by choosing an architecture that reflects, for example, your intuitions about how the outputs depend on the inputs. Here, though, let's just use the simple feed-forward neural network. Let's define some terms we're going to need when we talk about neural networks. The building block of any neural network is the unit. It's sometimes called the neuron, and it contains something called an activation function. The activation function relates the output to the input: it says that the output equals some function of the input. The activation of a unit is passed down some connections and goes into the input
of the subsequent units, so we need to look at these connections. Here's one of them. They're directed, so the information flows along the direction of the arrow. Each connection has a parameter, a weight, and the weight just multiplies the activation of the unit and feeds it to the next unit, so those weights are the parameters of the model. My simple feed-forward network is what's called fully connected: every unit in one layer is connected to all the units in the subsequent layer. So you can see that those weights are going to fit into a simple data structure. In fact, the set of weights that connects one layer to the next is just a matrix, and the way that the activations of one layer are fed to the inputs of the next layer is just a simple matrix multiplication. We can see that the units are arranged on this diagram in a particular way, into what we call layers. Some of the layers are inside the model, and they're called hidden layers; other layers take the inputs and the outputs. So information flows through the network in a particular direction: from the input layer, through the hidden layers, to the output layer. To summarise: we represent the input as a numerical vector, and that's placed on the input layer. That's then propagated through the network by a sequence of matrix multiplications and activation functions, and eventually arrives at the output layer. The activations of the output layer are the output of the network. I said that these units, sometimes called neurons, have an activation function in them, so let's look at what a unit is and what it does.
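A minimal sketch, in plain Python, of the two ideas just described: one-hot coding of a categorical feature, and a forward pass through a small fully connected feed-forward network, where each layer is a matrix multiplication followed by an activation function. The phone inventory, the layer sizes, the weight values, and the choice of sigmoid hidden units with a linear output layer are all illustrative assumptions, not values from any real system.

```python
import math

# Hypothetical, tiny phone inventory; a real system might use around 45 phones.
PHONES = ["sil", "p", "l", "ei", "s"]


def one_hot(phone, inventory):
    """All zeros except a single 1 at the position of `phone` in `inventory`."""
    vector = [0.0] * len(inventory)
    vector[inventory.index(phone)] = 1.0
    return vector


def matvec(weights, x):
    """Matrix-vector product; weights[j][i] is the weight from input unit i to unit j."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def identity(z):
    return z


def forward(x, layers):
    """Forward pass: each layer is a matrix multiply followed by an activation function."""
    activations = x
    for weights, activation in layers:
        weighted_sums = matvec(weights, activations)
        activations = [activation(z) for z in weighted_sums]
    return activations


# Arbitrary example weights: 5 inputs -> 3 sigmoid hidden units -> 2 linear outputs.
hidden_weights = [
    [0.2, -0.5, 0.8, 0.1, 0.0],
    [-0.3, 0.4, 0.6, -0.2, 0.5],
    [0.7, 0.1, -0.4, 0.3, -0.6],
]
output_weights = [
    [0.5, -0.2, 0.3],
    [-0.1, 0.4, 0.2],
]
network = [(hidden_weights, sigmoid), (output_weights, identity)]

x = one_hot("l", PHONES)  # [0.0, 0.0, 1.0, 0.0, 0.0]
speech_params = forward(x, network)  # two numbers standing in for vocoder parameters
```

Because the input is one-hot, the first matrix multiplication simply picks out one column of the hidden-layer weight matrix. Biases, which most real networks include, are left out here to match the description of the network above.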
A key idea in neural networks is that we can build up very complex models, which might have very large numbers of parameters (large numbers of weights, say), from very, very simple building blocks: very simple little operations. The unit is that building block. I'm just going to tell you about a very simple sort of unit; there are more complex forms of unit, and you need to read the literature to find out what they are. Here's a very simple unit, and it just does the following. It receives inputs from the preceding layer. Those inputs are simply the activations of units in the previous layer, multiplied by the weights on the connections that they've come down, and they all arrive together at the input to this unit, where they simply get summed up. So the input is a weighted sum of the activations of the previous layer. A weighted sum is just a linear operation, and it can be computed by the matrix multiplication that I talked about before. Importantly, inside each unit is an activation function, and that function must be nonlinear. If the function were linear, then the whole network would simply be a sequence of linear operations, and a product of linear operations is itself just another linear operation. So the network would be doing nothing more than one big matrix multiply: it would just be a very simple linear regression model, which is not very powerful. We want a nonlinear regression model, so we need to put a nonlinearity inside the units. Again, there are many, many possible choices of nonlinear function, and you need to do the readings to discover what sort of nonlinear activation functions we might use in these units. Very often it's some sort of squashing function: some sort of S-shaped curve, perhaps a sigmoid or a tanh. But there are many other possibilities, and that's another place where you need to make some design decisions when you're building a neural network: which activation functions are most appropriate for the problem that you're trying to
solve. The output simply comes out of the unit, and quite often that output is called the activation. So, to summarise this part: there are many, many choices of activation function, but they need to be nonlinear. Otherwise, the entire network just reduces to one big linear operation, and there's no point having all of those layers. So what are all those layers about? Why would you have multiple layers? Well, there are lots of ways to describe what a neural network is doing, but here we're talking about regression: regression from some input representation (linguistic features expressed as a vector of numbers) to some output representation (the speech parameters for a vocoder). We can think of the network as doing this regression as a sequence of simpler regressions. Each layer of weights, plus units applying nonlinear operations, is a little regression model, and we're doing the very complicated regression from inputs to outputs in a number of intermediate steps. Now, if you read the fundamental literature on this subject, you'll find a proof that a single hidden layer is enough: a neural network with a single hidden layer can approximate any continuous function, given enough hidden units. While that's true in theory, there are always differences between theory and practice, and what works well empirically is not always what the theory tells us. What we find empirically, in other words by experimentation, is that having multiple hidden layers is good: it works better. So a neural network is a nonlinear regression model. We put some representation of the input into the network, and we get some representation of the output on the output layer: the activations of the output layer. What's happening in the hidden layers is some sort of learned intermediate representation. We're trying to bridge the big gap between inputs and outputs by bridging lots of smaller gaps, and these intermediate representations are learned as part of training the model; we do not need to
decide what they are or what they mean. They're hidden. In other words, the model is not only performing regression; it's learning how to break a complicated regression problem down into a sequence of rather simpler regressions that, when stacked together, perform the end-to-end regression. So one way to describe what this network is doing is as a sequence of nonlinear projections, or regressions, from one space to another space to another space, which eventually gets us from this linguistic space to this acoustic space. The things in between are some other spaces: intermediate representations. We don't know what they are; the network is going to learn how best to represent the problem internally as part of training. You might compare that to the pipeline architecture that we've seen in our text-to-speech front end, where we break down the very complicated problem of moving from text to linguistic features into a sequence of processes, such as normalisation, part-of-speech tagging, or looking things up in a dictionary. There are lots of intermediate representations in that pipeline, such as the phonetic string, syllables, or symbolic prosody. But those representations are handcrafted: we've had to decide what they are through expert knowledge, and then we've built separate models to jump from one representation to the next. A neural network is a little bit like that, in the very general sense that it breaks a complex problem down into a sequence of simpler problems. But here we do not need to decide what the simpler problems are; we just choose how many steps there are. So there are a bunch of design parameters of a neural network that we need to choose. They're things like the number of hidden layers, the number of units in each hidden layer (which could vary from layer to layer), and the activation function in those hidden layers, with the sizes of the input and output layers being decided by, in our case, how many linguistic features we can extract from the text, and
how many parameters our vocoder needs. So that's a neural network, in very general terms. In the next part, we're going to see how we use that to do text-to-speech, and that's just going to be a matter of getting the input representation right.
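A small numerical check of the argument about nonlinearity, in plain Python. A unit is modelled as a weighted sum of its inputs followed by an activation function, a fully connected layer as one such unit per row of a weight matrix, and two stacked layers of linear units turn out to compute exactly the same mapping as a single layer whose weight matrix is the product of the two. The weight values are arbitrary, and biases are omitted to match the description of a unit above.

```python
import math


def sigmoid(z):
    """An S-shaped squashing function with outputs in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def linear(z):
    return z


def unit(incoming_activations, weights, activation):
    """A very simple unit: weighted sum of its inputs, then an activation function."""
    weighted_sum = sum(w * a for w, a in zip(weights, incoming_activations))
    return activation(weighted_sum)


def layer(weights, x, activation):
    """A fully connected layer: one unit per row of the weight matrix."""
    return [unit(x, row, activation) for row in weights]


def matmul(a, b):
    """Product of matrices a (n x k) and b (k x m), stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


# Arbitrary weights: 2 inputs -> 3 hidden units -> 2 outputs.
W1 = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
W2 = [[1.0, -1.0, 0.5], [0.0, 2.0, 1.0]]
x = [0.3, -0.7]

# Two layers of linear units...
stacked_linear = layer(W2, layer(W1, x, linear), linear)
# ...compute exactly the same thing as one linear layer with weight matrix W2 W1.
collapsed = layer(matmul(W2, W1), x, linear)

# With a sigmoid in the hidden units, the mapping is no longer linear.
stacked_nonlinear = layer(W2, layer(W1, x, sigmoid), linear)
```

Comparing `stacked_linear` with `collapsed` shows that the extra layer adds nothing when the units are linear, whereas `stacked_nonlinear` cannot in general be rewritten as a single matrix. A tanh (`math.tanh`) could be swapped in for the sigmoid to the same effect.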

Now we have a basic understanding of what a neural network is: it's nonlinear regression. For now, perhaps we could think of it as a bit of a black box, and think about how to do text-to-speech with it. That's just going to be a matter of taking the linguistic specification from the front end, doing various reformatting things, and adding a little more information (specifically, durational information) to get it into a format that is suitable for input to a neural network. That format is going to be a sequence of vectors. The sequence is going to be a time sequence, because speech synthesis is a sequence-to-sequence regression problem. So we'll start off very much as we did in HMM-based speech synthesis, by flattening the rich linguistic structures (because we don't know what else to do with them) into a sequence: a linear sequence of context-dependent phones, as in HMM synthesis. In this module, I'm going to use this HTK-style notation; in fact, it's from the HTS toolkit, which is commonly used for HMM synthesis. Just to remind you: that's the phone, and these punctuation symbols separate it from its context. Here we can see we've got two phones to the right and two phones to the left, plus some machine-friendly but human-unfriendly encoding of suprasegmental context, such as syllable structure or position in phrase. That's what I like to call flattening the linguistic structure. But in this structure, although it has time along this axis, time is measured in linguistic units, and in order to turn that into speech we need to know the duration of each of those linguistic units. So we're going to expand this description out into a sequence of vectors at a fixed timescale. In other words, it's going to be at the frame rate: maybe one frame every five milliseconds. That's a 200 Hz
frame rate, as opposed to what we see on the left, which is at the phonetic rate. We'll somehow construct a sequence of vectors, and there are going to be 200 of them per second. Obviously, to do that we need to add duration information, so we're going to need a duration model that helps us get from the linguistic timescale out to the fixed frame rate. I'm going to first show you the complete picture of doing speech synthesis, and then we'll backtrack and see how this input is actually prepared. Let's just take it as read that this is some representation of the linguistic information here, enhanced with durational information: it has been expanded out to the frame rate, and it's got some sort of duration features added to it. Maybe that's what these numbers here mean. We'll take that fixed-frame-rate representation and synthesise with it. Before we know exactly what's in it, and before we know how to train the neural network, I think it's helpful just to see the process of synthesis. We'll take each frame in turn, push it through the neural network, and generate a little bit of speech. So I'll take the first frame and push it through the network: that's called a forward pass. Let's just be clear how simple that really is. It's a question of writing the vector onto the input layer as its activations, then doing a matrix multiplication to get each of the inputs to this hidden layer. Each unit sums all of the activations that arrive down those weighted connections, applies the nonlinear function, and produces an output. We do another matrix multiplication to get the inputs to the next layer, apply the nonlinear function, and get its activations, or outputs. Then comes another matrix multiplication: the output layer receives all of those activations down another set of weights and produces some output. That's just a sequence of matrix multiplications, with nonlinear operations inside the hidden layers, which gives us our set of
speech parameters. Again, just to be clear, my network has only got two outputs; of course, we'd need a lot more than that for a full vocoder specification. Those speech parameters are then the input to a vocoder (that's this thing here), which will produce for us a little bit of speech corresponding to this frame of input. We just push those features through the vocoder, which produces a waveform, and we'll save that for later. Then we put the subsequent frames through (this one, then this one, and this one), which gives a whole sequence of fragments of waveform, which we just join together and play back as the speech signal. We might use overlap-add, for example, to join them together. That's the end-to-end process. What we need to explain now is how to make this stuff here. So let's go step by step through the process we're going to follow to construct the representation in front of us. This is a sequence of frames, so that's time measured in frames: this is frame one, this is frame two, and so on, for a sentence that we're trying to say. So the duration of speech that we will get out is equal to the number of frames times the time interval between frames, which is five milliseconds. Just by glancing across these features, we can see that part of them is binary (lots of zeros with a few ones sprinkled in), and part of them consists of continuous-valued numerical features. So we need to explain what these are and where they come from. The complete process for creating this input to the neural network is to take our linguistic specification from our front end in the usual way, and flatten that to get our sequence of context-dependent phones: this thing here, which we could go off and do HMM synthesis with. But for neural network synthesis we need to do a little more. Specifically, we have to expand it out to a time sequence at the fixed frame rate. So we go from the linguistic timescale of the linguistic specification and the context-dependent
phones to a fixed frame rate, and the way we do that is by adding duration information, which will have to be predicted. So we need a duration model. After applying the duration model, time is measured at the fixed frame rate. We can then expand these context-dependent phone names out into a sequence of binary feature vectors, and that's the frame sequence, ready for input to the neural network. To improve performance, we might add further fine-grained positional information. The duration information expands each context-dependent phone out into some sequence of frames, but at that point every frame within the phone would have exactly the same specification. It would seem useful to know how far through an individual phone we are while synthesising it, so we can add some fine-grained positional information below the phone level: some sub-phonetic information. We'll add that to the frame sequence and then input that to the neural network. Let's take all of that more slowly to see the individual steps. We shouldn't need to describe how the front end works; we've seen that picture a hundred times. We then rewrite its output as a sequence of context-dependent phones, and this is just a question of changing format. So getting from that linguistic structure (the thing that in Festival is an utterance structure) to this representation is simply a reformatting. We just walk down the phonetic sequence and attach the relevant context to the name of the phone, to arrive at something that looks a bit like this. There are several parts to this. There's the quinphone (the centre phone plus or minus two), and then some suprasegmental stuff to do with where we are in syllables, part of speech, and all sorts of other things. These are just examples of things we could use, and you could add anything else you think might be useful: just encode it in the name of the model. These are all linguistic things, so the positional features are the positions of linguistic objects in linguistic structures: position of
phone in syllable, position of syllable in word, position of word in phrase, those sorts of things. What we're going to do now is, step by step, expand that into a sequence of frames at a fixed frame rate. The first thing that's usually done is to expand each phone into a set of sub-phones. The reason for this is really a carry-over from HMM synthesis. So instead of working in terms of phones, we're going to divide them into sub-phones; here I've divided this one into five. This is still time, but it's still measured in linguistic units: these are phones, and this is a sequence of sub-phones of this particular phone. I've expanded the phone out into five sub-phones, so there's the beginning, there's the end, and there's some stuff in the middle, and it seems reasonable that knowing where we are in that sequence will be helpful for determining the final sound of this speech unit. The choice of five is just copied over from HMM synthesis. In many of the first papers of the new wave of neural network-based speech synthesis, a lot of machinery was carried over from HMM synthesis: these systems were basically built on top of HMM systems. We see that here in the way that we divide a phone into sub-phones: a sub-phone is simply an HMM state. So this is an HMM of one phone in context; that's its name. It's a model of an l in this context, and it's got five states, so we just break the phone into five sub-phone units. Then we're going to predict the duration at the sub-phonetic level: in other words, one duration per state, and that prediction is going to be in units of frames. So what is that duration model?
Well, in really early work, the duration model was just the regression tree from an HMM system, simply borrowed from that system. We already know that regression trees are not the smartest model for this problem, so we could easily replace that. For example, we could have a separate neural network whose job is to predict durations, or we could borrow durations from natural speech if we wanted to cheat, or we could get them from any other external duration model we like. So the duration model provides the duration of each state in frames. We know the name of the model and we know the index of the state, and that lets us follow the tree down to a leaf. The prediction at that leaf here would be two frames, and we'd write that down. We just do that for every state in our sequence. So this is the bridge between the linguistic timescale here (sub-phones) and the fixed frame rate here. Let's add those durations to our picture. That's the sequence of phones; I broke it down into sub-phones, and I'm now going to write durations on them. Those are the state indices in HTK style, so they start from two. We can now put the durations on, and a good way to write the durations is as a start time and an end time within the whole sentence. We now have what looks very much like an aligned label file, which is exactly what it is. The next step is to rewrite this as binary vectors, and then we're finally going to expand that out into frames. I'm going to describe this in a way that's rather specific to a particular set of tools: in fact, to the HTS toolkit. That's a widely used tool, so it's reasonable to use its formats, which are just borrowed from HTK. Of course, if you're not using that tool, or if you've written your own tool, you might do this in a rather different way. Conceptually, however, we're always going to need to do something equivalent to the following. We're going to turn each of these context-dependent model names (here's one of them) into a feature vector. It's going
to be mostly zeros with a few ones. In other words, each of these categorical features, and all of the rest, is going to be converted into a one-hot coding. In fact, we're just going to do the processing for the entire model name all at once: we're going to turn the whole thing into a "some-hot" coding, a feature vector that's almost all zeros but with a few ones in it, and those ones capture the information in this model name. Here's how this particular tool does it. We write down a set of patterns, each of which either matches or doesn't match the model name: matching results in a feature value of one, and not matching results in a feature value of zero. These patterns are in fact the same thing that we would use as the possible questions when building a regression tree in an HMM system. We're going to have a very, very long list of possible questions, because we're going to write down everything we could possibly ask about this model name. Most of the questions will not match, resulting in a value of zero, but a few will match. Let's do that. Here's the top of my list of questions, and I'm just going to scroll through it, asking each question about the model name. They're a little bit like regular expressions: I'm going to see if the model name contains this little bit of pattern. This pattern is just asking: is the centre phone equal to that value? Most of the time the answer will be no, and I'll write a zero into my feature vector. Whenever a question matches, when I find the answer is yes, I'll write a one into my feature vector. So let's do that. We start scrolling through the list of questions, writing 0, 0, 0, 0, 0, and eventually come to something that does match. This question here asks: is the centre phone equal to l? And here, does it?
Of course it does, so I write a one into my binary feature vector, and I keep on scrolling through the questions. Again, most of them don't match and I write a zero, and eventually I'll come to something else that matches. Here is the question that asks: do you have a p to the left? That question matches this p, which gives me another yes, and I write a one into my feature vector. So we can see that this feature vector is mostly zeros with a few ones. Those ones are the one-hot codings: this first part is the one-hot coding of the centre phone, and then there's a one-hot coding of the left phone. Then we'll carry on and do the right phone, the left-left phone, the right-right phone, and all of the other stuff, with a very long list of questions to do that. We're still operating at the linguistic timescale. What we have is this binary vector, which is very sparse: mostly zeros, with just a few ones. We now need to write it out at the fixed frame rate, and that's just a simple matter of duplicating it the right number of times for each HMM state. So the first step, then, is to obtain this vector for each HMM state. We can immediately add some simple positional information, and that is which state we're in. This sequence here is at the linguistic clock rate, counting states 2, 3, 4, 5, 6, and we now know from the duration model how long we would like to spend in each state. So we just duplicate the lines that number of times: this one will be duplicated twice. We write them out like that; that white space doesn't really mean anything, so we'll just rewrite it like that. That's now a sequence of feature vectors at a fixed frame rate. This is time in frames: one line per frame. Within a single HMM state, most of the vector is constant, and then we've got some positional information here that we might add. Here I've added a very simple feature, which is position within the state. It says: first we're halfway through, and then we're all the way through. Let's look at another state, this last state here. We need
to be in this one for three frames: those are the three frames here. So we're a third of the way through, two thirds of the way through, and then all the way through. This very simple positional feature is a little counter that counts our way through the state. So what does this part here, the sequence of frames, represent? It says: this is an l (that's encoded in one of these one-hot parts), in the context so-and-so (that's encoded in all the other one-hot parts), with a duration of 10 frames; you can count the lines, and there are 10 of them. And we've got little counters, little clocks, ticking away at different rates. This one's going around quite rapidly; this one's counting through states. We could add any other counters we like: we'll see in the real example that we might have other counters, for example ones that count backwards.
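A minimal sketch of the steps just described: answering a list of pattern questions against a context-dependent model name to get a sparse binary ("some-hot") vector, then writing that vector out once per frame according to the per-state durations, with the state index and a position-within-state counter appended. The model name, the question patterns, and the state durations are all hypothetical and heavily simplified. Glob-style matching with Python's standard `fnmatch` module is used here as a stand-in for the way a toolkit such as HTS matches its question patterns, not as that toolkit's actual implementation. The 5 ms frame shift follows the lecture.

```python
from fnmatch import fnmatchcase

# Hypothetical, heavily simplified model name in HTS-like quinphone notation,
# LL^L-C+R=RR: two phones of left context, the centre phone, two of right context.
# Real HTS labels append many suprasegmental fields after the quinphone part.
MODEL_NAME = "sil^p-l+ei=s"

# A tiny illustrative question set of (name, glob pattern) pairs; "*" matches any
# substring. Real question sets contain hundreds or thousands of questions.
QUESTIONS = [
    ("C-is-p", "*-p+*"),
    ("C-is-l", "*-l+*"),
    ("C-is-ei", "*-ei+*"),
    ("L-is-p", "*^p-*"),
    ("L-is-l", "*^l-*"),
    ("R-is-ei", "*+ei=*"),
    ("R-is-s", "*+s=*"),
]

FRAME_SHIFT_MS = 5  # one frame every 5 ms, i.e. 200 frames per second


def binary_features(model_name, questions):
    """Answer every question about the model name: 1.0 for a match, else 0.0."""
    return [1.0 if fnmatchcase(model_name, pattern) else 0.0 for _, pattern in questions]


def expand_to_frames(model_name, state_durations, questions):
    """Write the linguistic vector out at the fixed frame rate.

    state_durations is a list of (HTK-style state index, duration in frames)
    pairs. Every frame gets the same binary vector, plus the state index and a
    position-within-state counter that runs 1/d, 2/d, ..., 1 for duration d.
    """
    linguistic = binary_features(model_name, questions)
    frames = []
    for state, duration in state_durations:
        for k in range(1, duration + 1):
            frames.append(linguistic + [float(state), k / duration])
    return frames


# Hypothetical predicted durations (in frames) for emitting states 2 to 6.
durations = [(2, 2), (3, 1), (4, 3), (5, 1), (6, 3)]
frames = expand_to_frames(MODEL_NAME, durations, QUESTIONS)
total_ms = len(frames) * FRAME_SHIFT_MS  # 10 frames * 5 ms = 50 ms of speech
```

With these assumptions the first frame is the seven binary answers followed by `[2.0, 0.5]` (state 2, halfway through), and the last three frames end with position values of one third, two thirds and 1.0, like the counters described above. A real system would typically normalise numerical features such as the state index before training.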

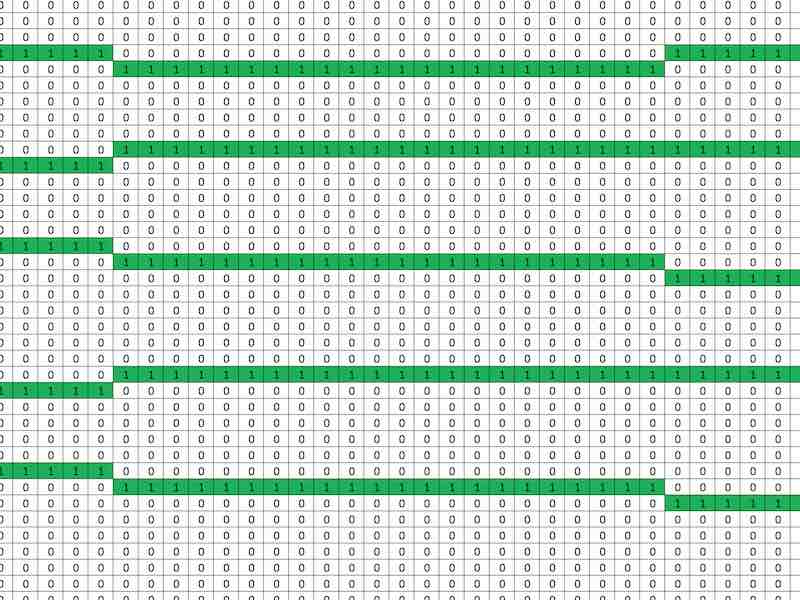

Here are the input features for one sentence for a frame-by-frame model (in spreadsheet format for convenience).

I'll just finish with a rather informal description of how to train a neural network, just to show that it's fairly straightforward. It's a very simple algorithm. Now, like all simple algorithms, we can dress it up in all sorts of enhancements, and you'll find when you start reading the literature that that's what happens. But in principle it's pretty simple. So this is going to be an impressionistic, hand-waving, non-mathematical introduction to how you train a neural network. We're going to use supervised machine learning: in other words, we know the inputs and the outputs, and they're going to be aligned. So we're going to have two sequences: a sequence of inputs and a sequence of outputs. They're both at the same frame rate, and there's a correspondence between one frame of input and one frame of output. Because the neural network in my pictures is rather small and simple, my inputs have got three dimensions and my outputs have got two dimensions. Of course, in real speech synthesis, this side is to do with vocoder parameters and this side is to do with linguistic features. We saw in the previous section how to prepare the input: how to get our sequence of vectors, which are mostly binary ones and zeros, with a few numerical positional features attached to them. Those are little counters that count through states or through phones; we could count through any other unit we liked, such as syllables, words, or phrases. The outputs are just the vocoder parameters: speech features. Now, the two sequences have to be aligned. How do you get that alignment?
Well, you actually know the answer to that: we can do it with forced alignment, in precisely the same way that you prepared data for a unit selection synthesiser. In other words, we prepare aligned label files with duration information attached to them, where that duration information didn't come from a model that predicts duration; it came from natural speech, from the training data, through forced alignment. So we can get aligned pairs of input and output vectors. Here's an example of what the data for training a neural network might look like. We've got some input and some output; this is time in frames, and these things are aligned. So we know that when we put this into the network, we would like to get that out of the network, and the job of the network is to learn that regression. These features all come from recorded natural speech: these are the speech features extracted from natural speech, and these are the linguistic features for the transcription of that speech, which have been time-aligned through forced alignment. So we've prepared our data, and now we'll train our network. I'll use my really simple small network for the example, so our inputs are going to be of dimensionality three and the outputs of dimensionality two. I warned you this is going to be rather impressionistic: we're not even going to show a single equation. The job of the network is to learn that when I show it this input, it should give me this output. The target output is an example from natural speech, so this is an aligned pair of input and output feature vectors. Typically, we'll initialise a neural network by just setting all the weights to random values. When we input this feature vector, it goes through this weight matrix, which to start with is just some random linear projection. The hidden layer applies some nonlinear function to it (say, a sigmoid), and we get another projection, and another, and then we get some output, which is just the projection of the input through those layers. So let's say we
we end up. Here, maybe we get the following value, and here we might get another value. We wouldn't expect the network to get the right answer, because its weights are random. The learning algorithm is then just going to gradually adjust the weights to make the output as close as possible to the target. That learning algorithm is called back-propagation, and, impressionistically, it does the following. We look at the output we would like to get, we look at the output that we got, and we compute some error between those two. Here, the output was higher than it should have been, so there's some error: the error here is about 0.3. So we need to adjust the weights in a way that makes this output a little bit smaller. We take the error and send it back through the network, and that sends messages to all the weights. These messages here are going to say: "You gave me this value, but it was too big. I want an output a little bit smaller than that, so could you just scale down your weight a little bit?" All those weights will get reduced a little. The error is also propagated back through this unit, through these weights, and there are little messages saying how they should be adjusted as well. The back-propagation algorithm can then take these errors that all arrive at the outputs of these units, so we're going to sum the errors that arrive here. We're going to send that backwards through the activation function and send messages down all of these weights as well, saying whether they should be increased a little bit or decreased a little bit. We'll have computed how much each weight needs to be changed, and then we'll update the weights and get a network which, next time, should give us something closer to the right output. So maybe we update our weights and put the vector back through the network, and now maybe we get the following outputs: a little bit closer to the correct values. We'll just do the same again: we'll compute the errors between what the network gave us and what we wanted. And we'll
use that error as a signal to send back through the network, to back-propagate, and it will tell the weights whether they need to increase or decrease. Now, of course, we won't just do that for a single input and a single target; we'll do it for the entire training set. We'll have thousands or millions of pairs of inputs and outputs. For each input, we'll put it through the network and compute the network output and the error. We'll do that across the entire training set to find the average error, and then we'll back-propagate through the weights to do an update. And then we'll iterate that: we'll make many, many passes through the data and many, many updates of the weights, and we'll gradually converge on a set of weights that gives us outputs that are as close as possible to the target. Now, that training algorithm, as I've described it, is extremely naive. It suggests that we need to make a pass through the entire training data before we can update the weights even once. In practice, we don't do that. We do it in what are called mini-batches, so we make weight updates a lot more frequently and get the network trained a lot quicker. And there are lots and lots of other tricks to get the network to converge on a good set of weights. I'm deliberately not going to cover those here, because that's an ever-changing field; you need to read the literature to find out what people are doing today when they're training neural networks for speech synthesis. Likewise, this very simple feed-forward architecture is about the simplest neural network we could draw, and people use far more sophisticated architectures, in which they're expressing something that they believe about the problem. Just to give you one example of the sort of thing you could do to make a more complicated architecture: you could choose not to fully connect two layers, but instead have a very specific pattern of connections that expresses something you think you know about the problem. That's all I would
like to say about training neural networks, because the rest is much better done from the readings. Let's finish off by seeing where we are in the big picture and what's coming up. I've only told you about the very simplest sort of neural network: feed-forward architectures. And the way I've described it is just a straight swap for the regression tree. We saw that, basically, a hidden Markov model is still involved. For example, it's there for forced alignment of the training data, and when we do synthesis, it's there as a sort of sub-phonetic clock. It says: phones are too coarse in granularity, so we need to divide them into five little pieces. And for each of those five pieces, we need to attach duration information and some positional features, which say how far through the phone you are in terms of states, and then use that to construct the input to the neural network. In early work on neural network speech synthesis, in this rediscovery of neural nets for speech synthesis, a lot of things were borrowed from HMM systems, including the duration model, even though it is a rather poor, regression-tree-based model. Later work tends to use a much better duration model. I wonder what that might be. Well, it's just another neural network. So a typical neural network speech synthesiser will have one neural network predicting duration, and then we'll use those durations and feed them into a second neural network, which does the regression onto speech parameters. What's coming next in neural network speech synthesis depends entirely on when you're watching this video, because the literature on this topic is changing very quickly. So from this point onwards, I'm not even going to attempt to give you videos about the state of the art in neural network speech synthesis; you're going to go and read the current literature to find that out. We can make use of our neural network speech synthesiser in a unit selection system, and so we'll come full circle and finish off, in the next module, looking at
hybrid synthesis. I'll describe that as unit selection using an acoustic-space formulation of the target cost function. And it's worth pointing out that the better the regression model we get from neural network speech synthesis, the better we expect hybrid synthesis to work. So any developments that we learn about from the literature on neural-network-based speech synthesis in a statistical parametric framework (in other words, driving a vocoder), we could take and put into a hybrid system, using it to drive a unit selection system. And we expect those improvements to carry through to better synthesis in the hybrid framework.
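The training loop described in this video can be sketched in a few lines of NumPy. This is a minimal sketch under assumptions that are not from the video: made-up toy data with 3-dimensional inputs and 2-dimensional targets (matching the small network in the pictures), one sigmoid hidden layer of 8 units, a linear output layer, bias terms, a mean squared error criterion, and arbitrary values for the learning rate, number of passes and mini-batch size.

```python
import numpy as np

rng = np.random.default_rng(0)

# Made-up aligned training data: mostly-binary 3-dim inputs ("linguistic
# features") and real-valued 2-dim targets ("vocoder parameters").
N = 1000
X = rng.integers(0, 2, size=(N, 3)).astype(float)
true_W = np.array([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.3]])  # hypothetical mapping
Y = np.tanh(X @ true_W) + 0.01 * rng.normal(size=(N, 2))


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Start from small random weights (plus biases, which the video does not mention)
H = 8  # hidden-layer size: an arbitrary choice
W1 = rng.normal(scale=0.5, size=(3, H))
b1 = np.zeros(H)
W2 = rng.normal(scale=0.5, size=(H, 2))
b2 = np.zeros(2)


def forward(x):
    h = sigmoid(x @ W1 + b1)  # linear projection, then sigmoid nonlinearity
    return h, h @ W2 + b2     # linear projection to the outputs (regression)


def mean_squared_error(x, y):
    return float(np.mean((forward(x)[1] - y) ** 2))


learning_rate, batch_size = 0.2, 32
loss_before = mean_squared_error(X, Y)
for epoch in range(300):                # many passes through the data
    order = rng.permutation(N)          # shuffle, then split into mini-batches
    for start in range(0, N, batch_size):
        idx = order[start:start + batch_size]
        x, y = X[idx], Y[idx]
        h, out = forward(x)
        # Error at the outputs: gradient of 0.5 * squared error, batch-averaged
        d_out = (out - y) / len(idx)
        # "Messages" to the output-layer weights and biases
        grad_W2 = h.T @ d_out
        grad_b2 = d_out.sum(axis=0)
        # Sum the errors arriving at each hidden unit, then pass them back
        # through the sigmoid (whose derivative is h * (1 - h))
        d_h = (d_out @ W2.T) * h * (1.0 - h)
        grad_W1 = x.T @ d_h
        grad_b1 = d_h.sum(axis=0)
        # Nudge every weight a little in the direction that reduces the error
        W2 -= learning_rate * grad_W2
        b2 -= learning_rate * grad_b2
        W1 -= learning_rate * grad_W1
        b1 -= learning_rate * grad_b1
loss_after = mean_squared_error(X, Y)
print(f"MSE before training: {loss_before:.4f}, after: {loss_after:.4f}")
```

Each inner-loop iteration is the "compute the error, send it back, nudge the weights" step described earlier, applied to one mini-batch rather than to the whole training set. Practical toolkits add many refinements that the video deliberately leaves out.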

The essential readings are concerned with speech synthesis. If you first need some help understanding the basic ideas of neural networks, try one or other of the recommended readings. Both are complete, but short, books. Use your skim-reading skills to locate the most important parts.

Reading

Zen et al: Statistical parametric speech synthesis using deep neural networks

The first paper that re-introduced the use of (deep) neural networks in speech synthesis.

Ling et al: Deep Learning for Acoustic Modeling in Parametric Speech Generation

A key review article.

Nielsen: Neural Networks and Deep Learning

A great introduction. Relatively light on maths, and with some interactive explanations.

Gurney: An introduction to neural networks

Somewhat old, but might be helpful in getting some of the basic concepts clear, if you find Nielsen's "Neural Networks and Deep Learning" too difficult to start with.

Wu et al: Merlin: An Open Source Neural Network Speech Synthesis System

Merlin is a toolkit for building Deep Neural Network models for statistical parametric speech synthesis. It is a typical frame-by-frame approach, pre-dating sequence-to-sequence models.

Wu et al: Deep neural networks employing Multi-Task Learning…

Some straightforward but effective techniques for improving the performance of speech synthesis using simple feedforward networks.

Watts et al: From HMMs to DNNs: where do the improvements come from?

Measures the relative contributions of the key differences between HMM-based and DNN-based systems: the regression model itself, state vs. frame predictions, and separate vs. combined stream predictions.

Download the slides for the class on 2024-03-12 (postponed from 2024-03-05)

Download some bonus slides which provide a derivation of back-propagation (non-examinable)

As a warm-up for the next module, here is an optional additional video to watch.

Download the slides for this video

Total video to watch in this section: 64 minutes

That was just a rather lightweight introduction. We deliberately kept things really simple and used the most basic type of neural network. The models covered in this module are already dated, but that’s OK! You need to understand the fundamentals before attempting to understand more advanced methods.

For a more practically-oriented description of the frame-by-frame approach, there is an Interspeech tutorial from 2017. This would be helpful if you want to do some practical work (beyond the scope of this course) with this approach, possibly as a precursor to moving on to state-of-the-art models.