We can combine a probabilistic model of our prior beliefs with a probabilistic model of the signal being classified.

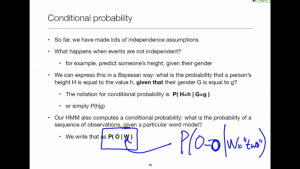

Conditional probability

It's time to get more careful with our notation, and state that the observations from an HMM are independent, given the model that generated them.
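One common way to write this down, as a sketch rather than the notation used on the slide: assume the model W passes through a state sequence Q = q_1, ..., q_T while generating the observations O = o_1, ..., o_T. The symbols Q, q_t and o_t are introduced here for illustration. Each observation then depends only on the state that emitted it, so the probability of the whole sequence factorises into a product:

```latex
P(O \mid Q, W) = \prod_{t=1}^{T} P(o_t \mid q_t, W)
```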

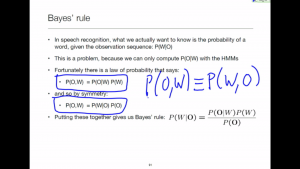

Bayes' rule

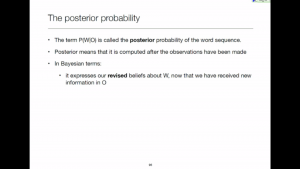

Now that we have correctly stated that the HMM computes P(O|W), we realise that what we actually need is P(W|O). Bayes' rule comes to the rescue.
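Written out with the same symbols, where W is the model (for example, a word) and O is the observation sequence, Bayes' rule is:

```latex
P(W \mid O) = \frac{P(O \mid W)\, P(W)}{P(O)}
```

Here P(O|W) is the likelihood computed by the HMM, P(W) is the prior probability of W, and P(W|O) is the posterior that we want.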

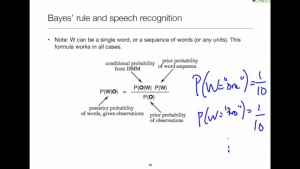

Bayes' rule: P(O)

P(O) is the probability of the observation sequence, but not conditioned on any model. How on earth are we going to compute that quantity without a model?
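One standard way to handle this, sketched here under the assumption that we only want the most probable W for a fixed O: P(O) takes the same value for every candidate W, so it cannot change which W wins and can be dropped from the comparison. The notation W-hat for the chosen model is introduced here for illustration.

```latex
\hat{W} = \arg\max_{W} \frac{P(O \mid W)\, P(W)}{P(O)} = \arg\max_{W} P(O \mid W)\, P(W),
\qquad \text{where } P(O) = \sum_{W} P(O \mid W)\, P(W)
```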

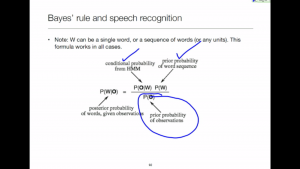

Bayes' rule: revising our prior beliefs

We elegantly combine prior beliefs with new evidence simply by multiplying probabilities.
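As a minimal sketch of that multiplication, the Python below combines a prior P(W) with a likelihood P(O|W) for each candidate word and normalises the result. The three-word vocabulary, all the numbers, and the function name `posterior` are hypothetical, chosen only for illustration; in a real system the likelihoods would come from the HMMs and would normally be handled as log probabilities.

```python
def posterior(priors, likelihoods):
    """Return P(W|O) for every W, given P(W) and P(O|W) as dicts."""
    # Numerator of Bayes' rule: likelihood times prior, per candidate W.
    joint = {w: likelihoods[w] * priors[w] for w in priors}
    # P(O) is the sum of the numerators over all candidates.
    evidence = sum(joint.values())
    return {w: p / evidence for w, p in joint.items()}


# Hypothetical prior beliefs about which word was spoken.
priors = {"one": 0.5, "two": 0.3, "three": 0.2}
# Hypothetical likelihoods P(O|W), standing in for HMM outputs.
likelihoods = {"one": 1e-5, "two": 8e-5, "three": 2e-5}

post = posterior(priors, likelihoods)
best = max(post, key=post.get)
print(best, post)
```

With these made-up numbers, "one" has the highest prior, but the evidence favours "two" strongly enough that "two" has the highest posterior.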