- This topic has 1 reply, 2 voices, and was last updated 9 years, 5 months ago by .

Viewing 1 reply thread



Weighting diagonal steps differently

In the slides you state that the ‘Number of local distances summed is path dependent, since paths vary in their length’, and that the solution to this is to ‘weight diagonal steps differently to horizontal or vertical ones’.

Could you explain this point in more detail, since I don’t quite see how one thing follows from the other?

Thank you!

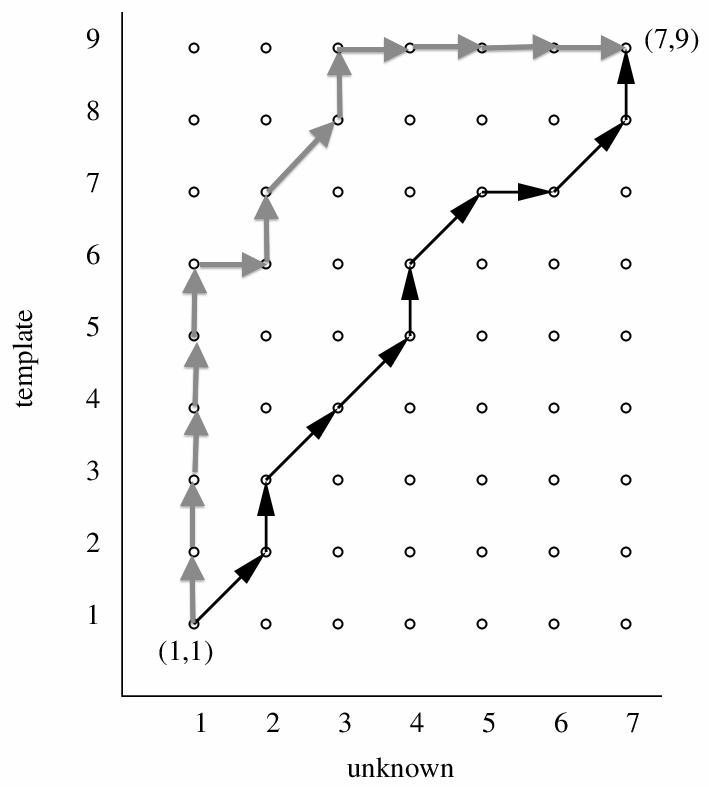

Think about the grid, which is the data structure used for Dynamic Time Warping. Paths from one corner to the diagonally opposite corner must pass through points on the grid, adding up the local distance at each point they visit. Paths close to the main diagonal generally pass through fewer points in total than paths that stray far from it.

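You can see in the diagram above how the two paths differ in the number of local distances that they must sum. Because local distances are never negative, a path that sums more of them will tend to accumulate a larger total. This leads to a bias in favour of paths that stay close to the main diagonal.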
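To make the bias concrete, here is a small Python sketch on a hypothetical 4×4 grid in which every local distance is the same, so the only thing that differs between paths is how many points they visit. In general, a path on an N×M grid that makes k diagonal moves visits N + M - 1 - k points; on a square N×N grid the purely diagonal path visits N points, while a path that hugs the edges visits 2N - 1. The helper `path_cost` is made up for this illustration.

```python
def path_cost(path, local):
    """Sum the local distances at every grid point the path visits."""
    return sum(local[i][j] for i, j in path)


n = 4
# Hypothetical 4x4 grid in which every local distance is 1.0, so any
# difference in total cost comes only from how many points a path visits.
local = [[1.0] * n for _ in range(n)]

# Straight along the main diagonal: (0,0), (1,1), (2,2), (3,3).
diagonal = [(k, k) for k in range(n)]
# Along one edge of the grid, then along the far side to the opposite corner.
edge = [(0, j) for j in range(n)] + [(i, n - 1) for i in range(1, n)]

print(len(diagonal), path_cost(diagonal, local))  # 4 4.0
print(len(edge), path_cost(edge, local))          # 7 7.0
```

Even though every point is equally "good", the edge path ends up with nearly twice the total, purely because it sums more local distances.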

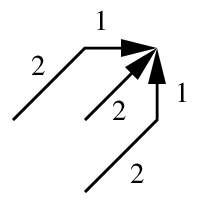

To reduce this bias, many solutions were proposed back when DTW was the state of the art. One is to penalise diagonal moves (e.g., add a penalty cost to the distance-so-far every time a diagonal move is made). Another popular method was to impose local constraints, such as those in the diagram above (the numbers are weights or penalty terms).
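A minimal Python sketch of one common weighting, often called the symmetric form: horizontal and vertical moves add the local distance once, and diagonal moves add it twice. If the start point is also weighted 2, then a path with k diagonal moves on an N×M grid carries total weight 2 + (N - 1 - k) + (M - 1 - k) + 2k = N + M, whatever k is, so no path is favoured just for being short. This is an assumption for illustration and not necessarily the weights shown in the diagram; the function `dtw_cost`, its `diag_weight` parameter, and the use of one-dimensional features with absolute difference as the local distance are all hypothetical choices.

```python
import math


def dtw_cost(x, y, diag_weight=2):
    """Accumulated DTW cost between 1-D sequences x and y (both non-empty).

    Local distance is |x[i] - y[j]| (an assumption for this sketch).
    Horizontal and vertical moves add the local distance once; diagonal
    moves add it diag_weight times. diag_weight=1 gives the plain,
    unweighted sum; diag_weight=2 gives the symmetric weighting, in which
    the weights along every path sum to len(x) + len(y).
    """
    n, m = len(x), len(y)
    acc = [[math.inf] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = abs(x[i] - y[j])
            if i == 0 and j == 0:
                # Treat the start point as if it were entered diagonally.
                acc[i][j] = diag_weight * d
                continue
            best = math.inf
            if i > 0:
                best = min(best, acc[i - 1][j] + d)  # vertical move
            if j > 0:
                best = min(best, acc[i][j - 1] + d)  # horizontal move
            if i > 0 and j > 0:
                best = min(best, acc[i - 1][j - 1] + diag_weight * d)  # diagonal move
            acc[i][j] = best
    return acc[n - 1][m - 1]


# Every local distance here is 1, so any difference between paths comes
# only from how the moves are weighted.
x, y = [0, 0, 0], [1, 1, 1, 1, 1]
print(dtw_cost(x, y, diag_weight=1))  # 5: the path with the most diagonal moves wins
print(dtw_cost(x, y, diag_weight=2))  # 8 = len(x) + len(y), for any path
```

With the symmetric weights, dividing the result by len(x) + len(y) gives a per-frame average, which makes scores comparable between sequence pairs of different lengths. An additive diagonal penalty, as mentioned above, could be sketched the same way by adding a constant to the diagonal branch instead of multiplying the local distance.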

Is this still important?

For automatic speech recognition, this is all outdated and no longer important. But there is a general lesson that might apply to other applications of dynamic programming: look for biases towards certain solutions, and ask whether that needs to be compensated for.


This is the new version. Still under construction.

Copyright © 2025 · Balance Child Theme on Genesis Framework · WordPress