Only an outline of the main approaches, with little technical detail. Useful as a summary of why these tasks are harder than you might think.

King: Measuring a decade of progress in Text-to-Speech

A distillation of the key findings of the first 10 years of the Blizzard Challenge.

Kawahara et al: Restructuring speech representations…

The key paper about the STRAIGHT vocoder, which was originally intended for manipulating recorded natural speech.

Talkin: A Robust Algorithm for Pitch Tracking (RAPT)

The classic algorithm for estimating F0 from speech signals.
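RAPT scores candidate pitch periods with a normalized cross-correlation function (NCCF), then uses dynamic programming across frames to choose the final F0 track. The sketch below shows only the per-frame candidate step. It assumes a single voiced frame held in a Python list, takes local NCCF peaks as candidate periods, and picks one using a linear bias against longer lags to discourage octave errors. It omits the coarse/fine two-pass search, the voicing decision and the dynamic programming. The function names and default parameter values (including `lag_wt=0.3`) are illustrative assumptions, not taken from the paper.

```python
import math


def nccf(x, lag, n):
    """Normalized cross-correlation between x[0:n] and x[lag:lag+n]."""
    a, b = x[:n], x[lag:lag + n]
    num = sum(p * q for p, q in zip(a, b))
    energy = math.sqrt(sum(p * p for p in a) * sum(q * q for q in b))
    return num / energy if energy > 0 else 0.0


def estimate_f0(frame, fs, f0_min=60.0, f0_max=400.0, window_s=0.01, lag_wt=0.3):
    """Estimate F0 (Hz) for one voiced frame from lag-biased NCCF peaks; None if no peak."""
    n = int(round(window_s * fs))  # correlation window length in samples
    min_lag, max_lag = int(fs / f0_max), int(fs / f0_min)
    if len(frame) < max_lag + n:
        raise ValueError("frame too short for the requested F0 range")
    lags = range(min_lag, max_lag + 1)
    c = [nccf(frame, lag, n) for lag in lags]
    best_lag, best_score = None, -math.inf
    for i in range(1, len(c) - 1):
        if c[i] >= c[i - 1] and c[i] >= c[i + 1]:  # local NCCF peak = candidate period
            # Linear bias against longer lags discourages picking a multiple of the true period.
            score = c[i] * (1.0 - lag_wt * lags[i] / max_lag)
            if score > best_score:
                best_lag, best_score = lags[i], score
    return None if best_lag is None else fs / best_lag


fs = 16000
frame = [math.sin(2 * math.pi * 200 * t / fs) for t in range(800)]  # 200 Hz test tone
print(estimate_f0(frame, fs))
```

For a pure tone the NCCF peaks at every multiple of the period with nearly identical height. The lag bias is what makes the shortest one win, which is why RAPT-style trackers need some such preference.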

Clark et al: Statistical analysis of the Blizzard Challenge 2007 listening test results

Explains the types of statistical tests employed in the Blizzard Challenge. These are deliberately quite conservative: for example, MOS data are correctly treated as ordinal. Also includes a section on Multi-Dimensional Scaling (MDS), a type of analysis that is less widely used than the others.
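Because MOS ratings are ordinal, a conservative analysis reports medians and uses rank-based tests rather than means and t-tests. The sketch below is a generic illustration, not the exact procedure of Clark et al. It assumes each system's scores are paired by listener and sentence, compares every pair of systems with a Wilcoxon signed-rank test (normal approximation, without the tie correction to the variance), and applies a Bonferroni correction. The system names and ratings are made up.

```python
import math
from itertools import combinations
from statistics import median


def signed_rank_p(x, y):
    """Two-sided Wilcoxon signed-rank test on paired scores (normal approximation)."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences are discarded
    n = len(diffs)
    if n == 0:
        return 1.0
    # Rank |differences|; tied magnitudes share their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    start = 0
    while start < n:
        end = start
        while end + 1 < n and abs(diffs[order[end + 1]]) == abs(diffs[order[start]]):
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)  # no tie correction, for brevity
    z = (w_plus - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal


# Hypothetical 5-point ratings, paired by listener and sentence across systems.
scores = {
    "A": [4, 5, 4, 4, 5, 3, 4, 5, 4, 4, 5, 4],
    "B": [4, 4, 4, 3, 5, 3, 4, 4, 4, 4, 4, 4],
    "C": [2, 3, 2, 2, 3, 2, 3, 2, 2, 3, 2, 2],
}
pairs = list(combinations(sorted(scores), 2))
alpha = 0.05 / len(pairs)  # Bonferroni correction for multiple comparisons
for s in sorted(scores):
    print(s, "median", median(scores[s]))
for a, b in pairs:
    p = signed_rank_p(scores[a], scores[b])
    print(a, b, f"p={p:.4f}", "significant" if p < alpha else "n.s.")
```

Dividing the significance level by the number of comparisons is simple and conservative. With many systems it makes each individual test much harder to pass.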

Norrenbrock et al: Quality prediction of synthesised speech…

Although standard objective speech quality measures such as PESQ do not work well for synthetic speech, methods constructed specifically for synthetic speech do work to some extent.

Mayo et al: Multidimensional scaling of listener responses to synthetic speech

Multi-dimensional scaling is a way to uncover the different perceptual dimensions that listeners use when rating synthetic speech.
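The MDS variant used by Mayo et al., and how they derive dissimilarities from listener responses, are described in the paper. As a hedged illustration of the general idea, the sketch below implements classical (Torgerson) metric MDS in plain Python. It double-centres a matrix of pairwise dissimilarities between stimuli and takes the top eigenvectors, found here by power iteration with deflation, as coordinates. It assumes the dissimilarities are close to Euclidean, so that the dominant eigenvalues are positive. The usage example uses made-up geometric points, not listener data.

```python
import math
import random


def classical_mds(d, k=2, iters=1000, seed=0):
    """Classical (Torgerson) MDS of a symmetric dissimilarity matrix d into k dimensions."""
    n = len(d)
    # Double-centre the squared dissimilarities: B = -1/2 * J D^2 J.
    sq = [[d[i][j] ** 2 for j in range(n)] for i in range(n)]
    means = [sum(row) / n for row in sq]  # row means equal column means (d is symmetric)
    grand = sum(means) / n
    b = [[-0.5 * (sq[i][j] - means[i] - means[j] + grand) for j in range(n)] for i in range(n)]

    rng = random.Random(seed)
    coords = [[0.0] * k for _ in range(n)]
    for dim in range(k):
        # Power iteration for the largest remaining eigenvalue of B.
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                break
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(b[i][j] * v[j] for j in range(n)) for i in range(n))
        if lam <= 1e-9:
            break  # no further positive eigenvalues: remaining dimensions stay zero
        for i in range(n):
            coords[i][dim] = v[i] * math.sqrt(lam)
        # Deflate B so the next pass finds the next eigenvector.
        b = [[b[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords


points = [(0, 0), (2, 0), (0, 1), (2, 1)]  # made-up 2-D "stimuli"
d = [[math.dist(p, q) for q in points] for p in points]
for row in classical_mds(d, k=2):
    print([round(x, 3) for x in row])
```

The recovered coordinates match the original points only up to rotation, reflection and translation, which is why MDS dimensions have to be interpreted after the fact (for example, by correlating them with acoustic properties of the stimuli).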

Benoît et al: The SUS test

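A method for evaluating the intelligibility of synthetic speech using Semantically Unpredictable Sentences (SUS). Because the sentences are grammatical but meaningless, listeners cannot guess words from context, which avoids the ceiling effect.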
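A SUS test fills fixed syntactic frames with randomly chosen words, and listeners' transcriptions are commonly scored by word error rate. The sketch below illustrates both steps under simplifying assumptions. The word lists and the two templates are hypothetical stand-ins, not the structures or vocabulary defined by Benoît et al. The scoring uses a standard word-level Levenshtein alignment.

```python
import random

# Hypothetical word lists, for illustration only.
LEXICON = {
    "noun": ["table", "truth", "river", "window", "song", "shoe"],
    "adj": ["blue", "loud", "tender", "square"],
    "verb_tr": ["paints", "follows", "drinks", "hides"],
    "verb_intr": ["walks", "sleeps", "sings", "falls"],
    "prep": ["through", "under", "beside", "near"],
}

# Hypothetical templates: slot names are filled from LEXICON, other tokens are literal.
TEMPLATES = [
    ["the", "noun", "verb_intr", "prep", "the", "adj", "noun"],
    ["the", "adj", "noun", "verb_tr", "the", "noun"],
]


def make_sus(rng):
    """Fill a random template with random words: grammatical but semantically unpredictable."""
    template = rng.choice(TEMPLATES)
    words = [rng.choice(LEXICON[tok]) if tok in LEXICON else tok for tok in template]
    return " ".join(words).capitalize() + "."


def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance between transcriptions, divided by reference length."""
    ref = reference.lower().strip(".").split()
    hyp = hypothesis.lower().strip(".").split()
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,               # deletion
                           cur[j - 1] + 1,            # insertion
                           prev[j - 1] + (r != h)))   # substitution or match
        prev = cur
    return prev[-1] / len(ref)


rng = random.Random(7)
print(make_sus(rng))
print(word_error_rate("The table walks through the blue truth.",
                      "the table walks through a blue tooth"))
```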

Bennett: Large Scale Evaluation of Corpus-based Synthesisers

An analysis of the first Blizzard Challenge, an evaluation of speech synthesisers built using a common database.

Fitt & Isard: Synthesis of regional English using a keyword lexicon

An extension and practical application of Wells’ keyvowels idea, which enables efficient generation of a pronunciation dictionary tailored to a specific accent or speaker.
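The idea behind a keyword lexicon is that each word is transcribed once, using accent-independent symbols. Accent-specific pronunciations are then generated by mapping those symbols to phones. The sketch below illustrates only that idea. The four-word lexicon, the symbol names (borrowed from Wells' lexical sets) and the simplified RP and Northern English vowel mappings are assumptions for illustration, not the symbol set or rules used by Fitt & Isard.

```python
# Hypothetical accent-independent base lexicon: vowels are keyvowel symbols
# (named after Wells' lexical sets), consonants are IPA phones.
BASE_LEXICON = {
    "bath": ["b", "BATH", "θ"],
    "trap": ["t", "ɹ", "TRAP", "p"],
    "cup": ["k", "STRUT", "p"],
    "foot": ["f", "FOOT", "t"],
}

# Hypothetical, simplified realisation of each keyvowel per accent.
ACCENT_VOWELS = {
    "rp": {"BATH": "ɑː", "TRAP": "æ", "STRUT": "ʌ", "FOOT": "ʊ"},
    "northern_english": {"BATH": "a", "TRAP": "a", "STRUT": "ʊ", "FOOT": "ʊ"},
}


def accent_lexicon(accent):
    """Expand the base lexicon into accent-specific pronunciations."""
    rules = ACCENT_VOWELS[accent]
    # Keyvowel symbols are replaced; anything else (consonants) passes through unchanged.
    return {word: [rules.get(sym, sym) for sym in phones]
            for word, phones in BASE_LEXICON.items()}


for accent in ACCENT_VOWELS:
    lex = accent_lexicon(accent)
    print(accent, {w: "".join(p) for w, p in lex.items()})
```

Adding an accent means writing one new mapping table rather than re-transcribing every word. Mergers such as BATH = TRAP or STRUT = FOOT fall out of the mapping automatically.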

Kominek & Black: CMU ARCTIC databases for speech synthesis

Widely used, copyright-free speech databases for speech synthesis.

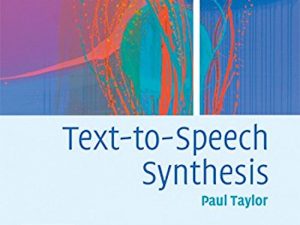

Taylor – Section 17.2 – Evaluation

Covers testing of the system by its developers, as well as evaluation via listening tests.

This is the new version. Still under construction.